Foundations of Geospatial Analysis

Bartlett Centre for Advanced Spatial Analysis, University College London

November 21, 2023

About Me

Professor of Urban Analytics @ Bartlett Centre for Advanced Spatial Analysis (CASA), UCL

Geographer by background - ex-Secondary School Teacher - back in HE for 16+ years

Taught GIS / Spatial Data Science at postgraduate level for the last 11 years

About this session

Whistle-stop tour of some of the key concepts relating to spatial data

An illustrative example analysing some spatial data in London - demonstrating the “spatial is special” idiom and how we might account for spatial factors in our analysis

All slides and examples are produced using Quarto and R, so everything can be forked and reproduced in your own time later - just go to the GitHub repo link below

By the end I hope you’ll all leave with a better introductory understanding of why and how we should pay attention to the influence of space in any analysis

Key Geospatial Concepts

- Where? (absolute)

- Where? (relative)

- Storing where - spatial data

- How near or distant?

- What scale?

- What shape?

Where? (absolute)

Everything happens somewhere

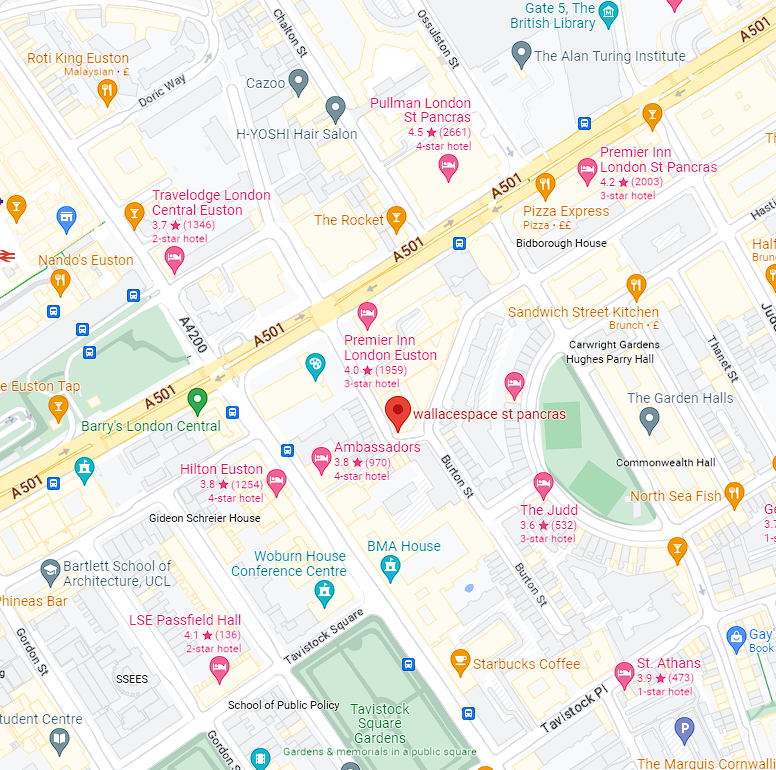

- We’re here: Wallspace, 22 Duke’s Road, Camden, London, England, Europe, Northern Hemisphere, Earth


Where? Coordinate Reference Systems

Coordinates are more reliable than place names, which are rarely unique and often refer to fuzzy locations

The Earth is roughly spherical (more precisely, a slightly flattened ellipsoid), and any point on its surface can be described using the World Geodetic System (WGS), a geographic (latitude/longitude) coordinate system

Points can be referenced by their position on a grid of latitudes (degrees north or south of the Equator) and longitudes (degrees east or west of the Prime, or Greenwich, Meridian)

The last major revision of the World Geodetic System was in 1984, and WGS84 is still used today as the standard system for referencing places on the globe.

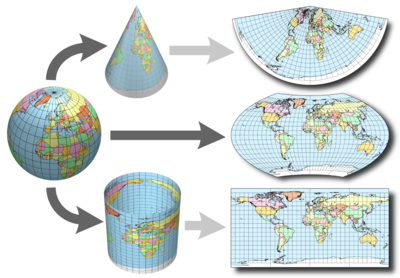

Where? Coordinate Reference Systems

Projected coordinate reference systems convert the 3D globe to a 2D plane, and can do so in a huge variety of ways; every projection distorts some combination of area, shape, distance or direction

Most national mapping agencies maintain their own projected coordinate systems. In Britain, the Ordnance Survey maintains the British National Grid, which locates places by Easting and Northing coordinates in metres (six digits each across most of Great Britain)

Most standard coordinate systems can be referenced by an EPSG code, e.g. WGS84 = 4326 or British National Grid = 27700, and mathematical transformations convert coordinates between them
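A transformation between EPSG codes is ultimately a formula. As a minimal base R sketch, the spherical Web Mercator projection (EPSG:3857, used by most web maps) takes WGS84 (EPSG:4326) longitude/latitude in degrees and returns x/y in metres. This is not the British National Grid transformation, which also needs a datum shift from WGS84 to OSGB36; in practice you would let a library do the work, e.g. `sf::st_transform(x, 27700)`. The function name `wgs84_to_web_mercator` and the example coordinates are illustrative assumptions.

```r
# Spherical Web Mercator (EPSG:3857): a simple example of a projection formula.
# Input: WGS84 (EPSG:4326) longitude/latitude in decimal degrees.
# Output: x/y in metres on a flat 2D plane.
wgs84_to_web_mercator <- function(lon, lat) {
  r <- 6378137                               # WGS84 semi-major axis (metres), used as the sphere radius
  lon_rad <- lon * pi / 180
  lat_rad <- lat * pi / 180
  x <- r * lon_rad                           # x grows linearly with longitude
  y <- r * log(tan(pi / 4 + lat_rad / 2))    # y is stretched more and more towards the poles
  cbind(x = x, y = y)
}

# Approximate location of central London (illustrative values only)
wgs84_to_web_mercator(lon = -0.1276, lat = 51.5072)
```

The stretching in `y` is why Web Mercator exaggerates the size of high-latitude areas, and why distances or areas should be measured in a projection designed for the region being studied (such as British National Grid for Britain).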

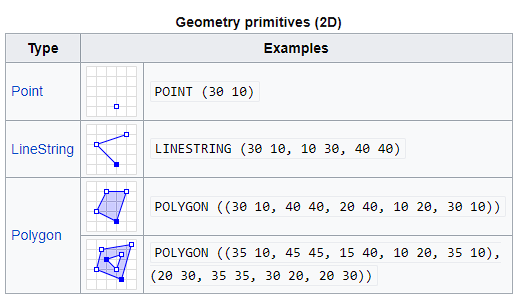

Where? Describing and Locating Things with Coordinates

Once we have a coordinate reference system we can locate objects accurately in space

Most objects that spatial data scientists are concerned with (apart from gridded representations, which we will ignore for now!) can be simplified to either a point, a line or a polygon in that space

Polygons and lines are just multiple point coordinates joined together!

The examples on the right store geometries in the well-known text (WKT) format for representing vector (point, line, polygon) geometries
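To make the "lines and polygons are just joined-up points" idea concrete, here is a small base R sketch that writes coordinate pairs out as WKT. The helper names (`wkt_point`, `wkt_linestring`, `wkt_polygon`) and the coordinates are hypothetical; real projects would normally parse and write WKT with a library such as sf (e.g. `sf::st_as_sfc()` on a WKT string).

```r
# Format numbers as plain decimal text (avoids R printing 1e+05 for 100000)
fmt_num <- function(v) sprintf("%.15g", v)

# Join an n x 2 coordinate matrix into "x1 y1, x2 y2, ..."
fmt_coords <- function(m) paste(fmt_num(m[, 1]), fmt_num(m[, 2]), collapse = ", ")

wkt_point <- function(x, y) sprintf("POINT (%s %s)", fmt_num(x), fmt_num(y))

wkt_linestring <- function(m) sprintf("LINESTRING (%s)", fmt_coords(m))

wkt_polygon <- function(m) {
  # A polygon ring must be closed: repeat the first vertex at the end if needed
  if (any(m[1, ] != m[nrow(m), ])) m <- rbind(m, m[1, ])
  sprintf("POLYGON ((%s))", fmt_coords(m))
}

# Hypothetical examples (e.g. British National Grid eastings/northings in metres)
sq <- rbind(c(0, 0), c(10, 0), c(10, 10), c(0, 10))
wkt_point(529000, 182000)                              # "POINT (529000 182000)"
wkt_linestring(rbind(c(0, 0), c(5, 5), c(10, 0)))      # "LINESTRING (0 0, 5 5, 10 0)"
wkt_polygon(sq)                                        # "POLYGON ((0 0, 10 0, 10 10, 0 10, 0 0))"
```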

Storing where - managing spatial data

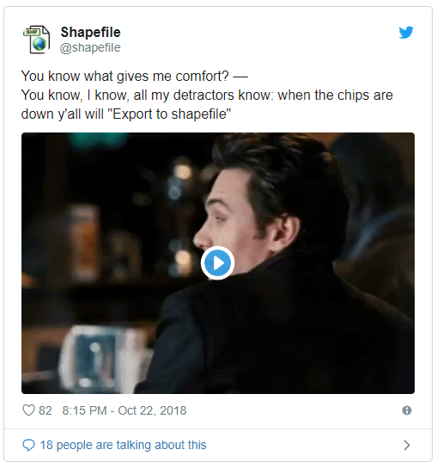

Impossible to talk about spatial data without mentioning the shapefile

Developed by ESRI in the early 1990s, it has become pretty much the de facto standard for storing and sharing spatial data - even though it’s a terrible format!

Shapefiles store geometries (shapes) and attributes (information about those shapes)

Not a single file, but actually a collection of files:

- .shp - geometries

- .shx - index

- .dbf - attributes

- plus some others, e.g. .prj, which stores the coordinate reference system!

Superseded by LOTS of alternative formats, such as GeoJSON (web) and GeoPackage (general purpose), which do the same job in better ways for different applications

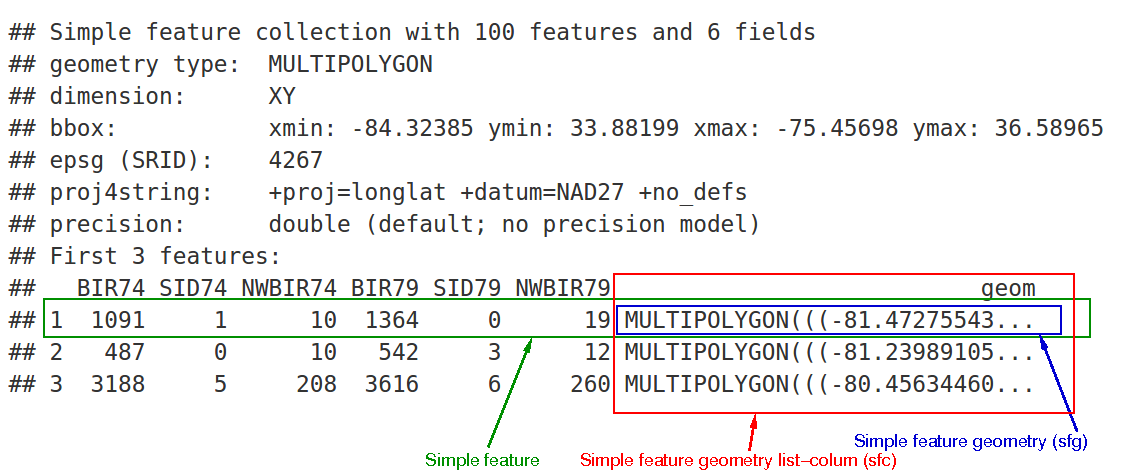

Storing where - Simple Features

Simple Features - OGC (Open Geospatial Consortium) standard that specifies a common storage and access model for 2D geometries

A two-part standard:

- Part 1 - Common Architecture, defining geometries, attributes etc. via WKT

- Part 2 - supports storage, retrieval, query and update of simple geospatial feature collections via SQL (Structured Query Language, around since the 1970s)

Simple Features is implemented in most spatially enabled database management systems (e.g. the PostGIS extension for PostgreSQL, Oracle Spatial etc.)

The sf package in R enables storage of spatial data and attributes in a single data frame object
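A minimal sketch of the "one data frame" idea: attributes sit in ordinary columns and the geometry sits alongside them, here first as WKT text. If sf is installed, `sf::st_as_sf()` with its `wkt` argument turns that text into a proper geometry column with a CRS (27700 = British National Grid). The ward names, scores and polygons are hypothetical, and the sf step is guarded so the example still runs without the package.

```r
# A plain data frame: attributes as ordinary columns, geometry as WKT text
# (ward names, scores and coordinates are hypothetical)
wards <- data.frame(
  ward = c("Ward A", "Ward B"),
  gcse_score = c(340, 355),
  wkt = c(
    "POLYGON ((0 0, 1000 0, 1000 1000, 0 1000, 0 0))",
    "POLYGON ((1000 0, 2000 0, 2000 1000, 1000 1000, 1000 0))"
  ),
  stringsAsFactors = FALSE
)

# With sf installed, convert the WKT column into a geometry column tagged with a CRS;
# each row remains one feature carrying its attributes
if (requireNamespace("sf", quietly = TRUE)) {
  wards_sf <- sf::st_as_sf(wards, wkt = "wkt", crs = 27700)
  print(wards_sf)
  print(sf::st_area(wards_sf))   # areas in square metres, computed from the geometries
}
```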

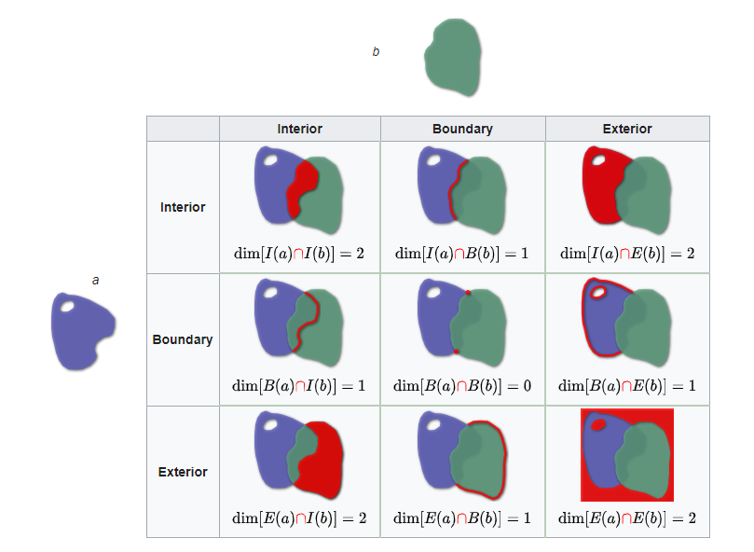

Where? Relative - Tobler’s First Law of Geography

“Everything is related to everything else, but near things are more related than distant things.”

This observation underpins much of what spatial data scientists do

Being able to locate something in space, relative to something else, allows us to:

- explain why something may be occurring where it is

- make better predictions about nearby or further away things

Underpins the whole geodemographics (customer segmentation) industry!!

Where? Relative - John Snow’s Cholera Map

Where? Relative - Defining ‘near’ and ‘distant’

Near and distant can mean different things in different contexts

- the furthest one would travel to buy a pint of milk is somewhat different to the furthest one might be willing to commute for a job

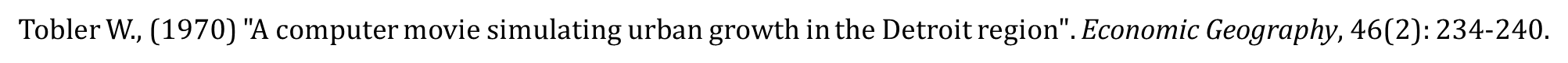

In spatial data science, one simple way of separating near from distant is by the topological relationship between objects; the Dimensionally Extended 9-Intersection Model (DE-9IM) is the standard topological model used in GIS

Touching or overlapping objects = ‘near’

Where? Relative - Exploring Near and Distant

- Near and distant in London

- Map shows 2011 Census Wards in London, within Borough Boundaries

- The Greater London Authority produced the London Ward Atlas - https://data.london.gov.uk/dataset/ward-profiles-and-atlas - which collates a range of demographic and economic indicators for each of these zones in the city

Where? Relative - Exploring Near and Distant

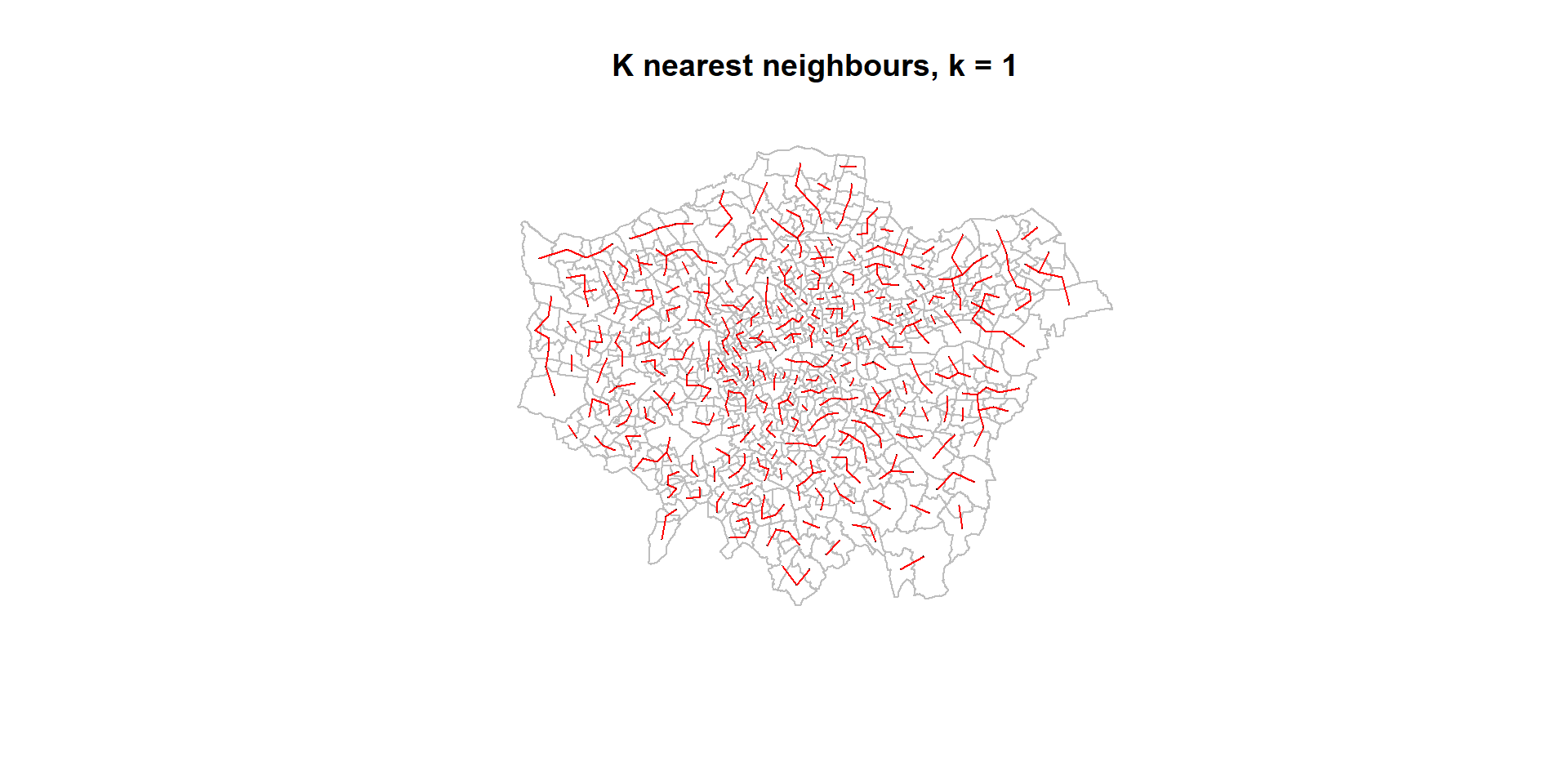

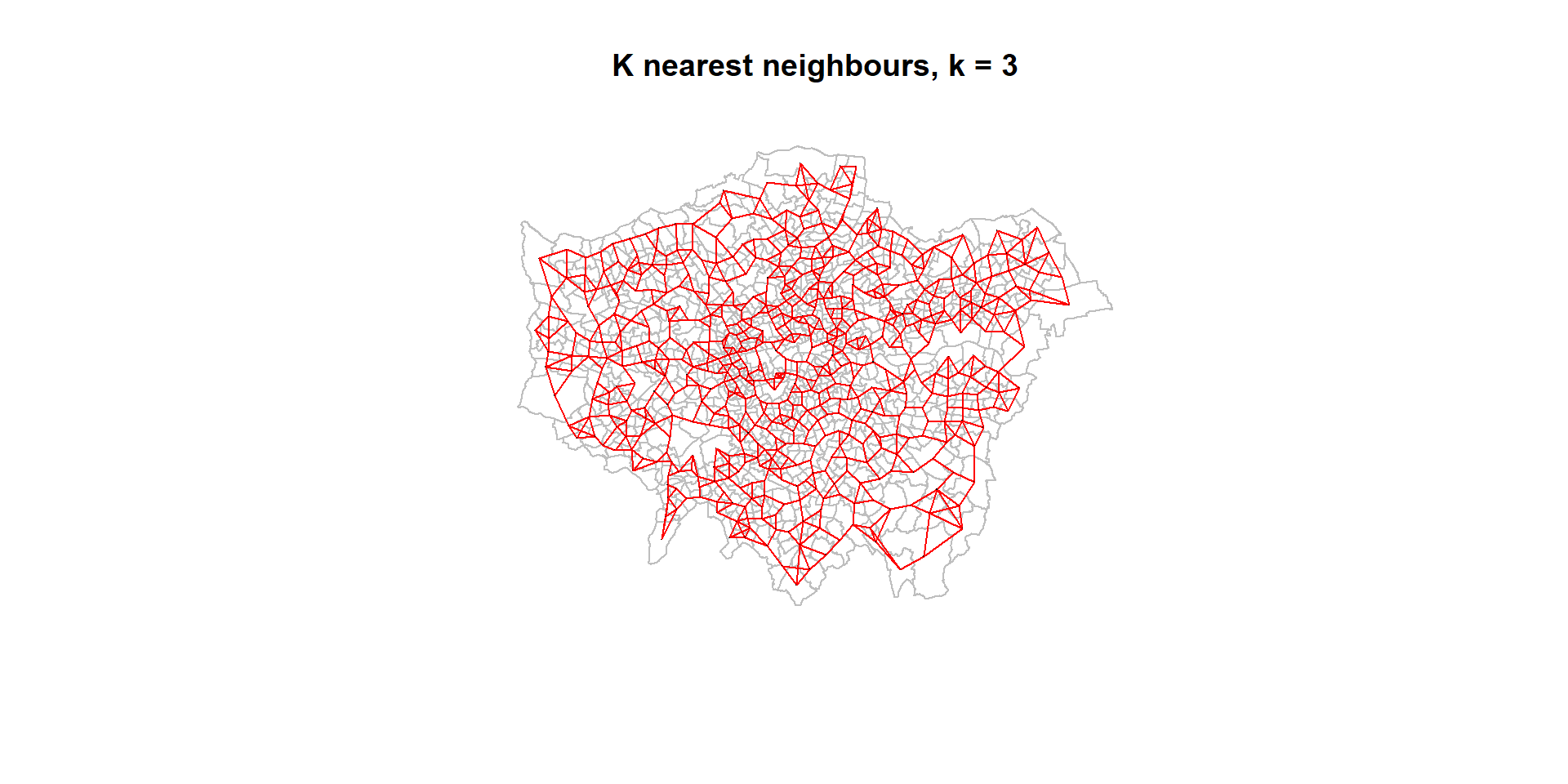

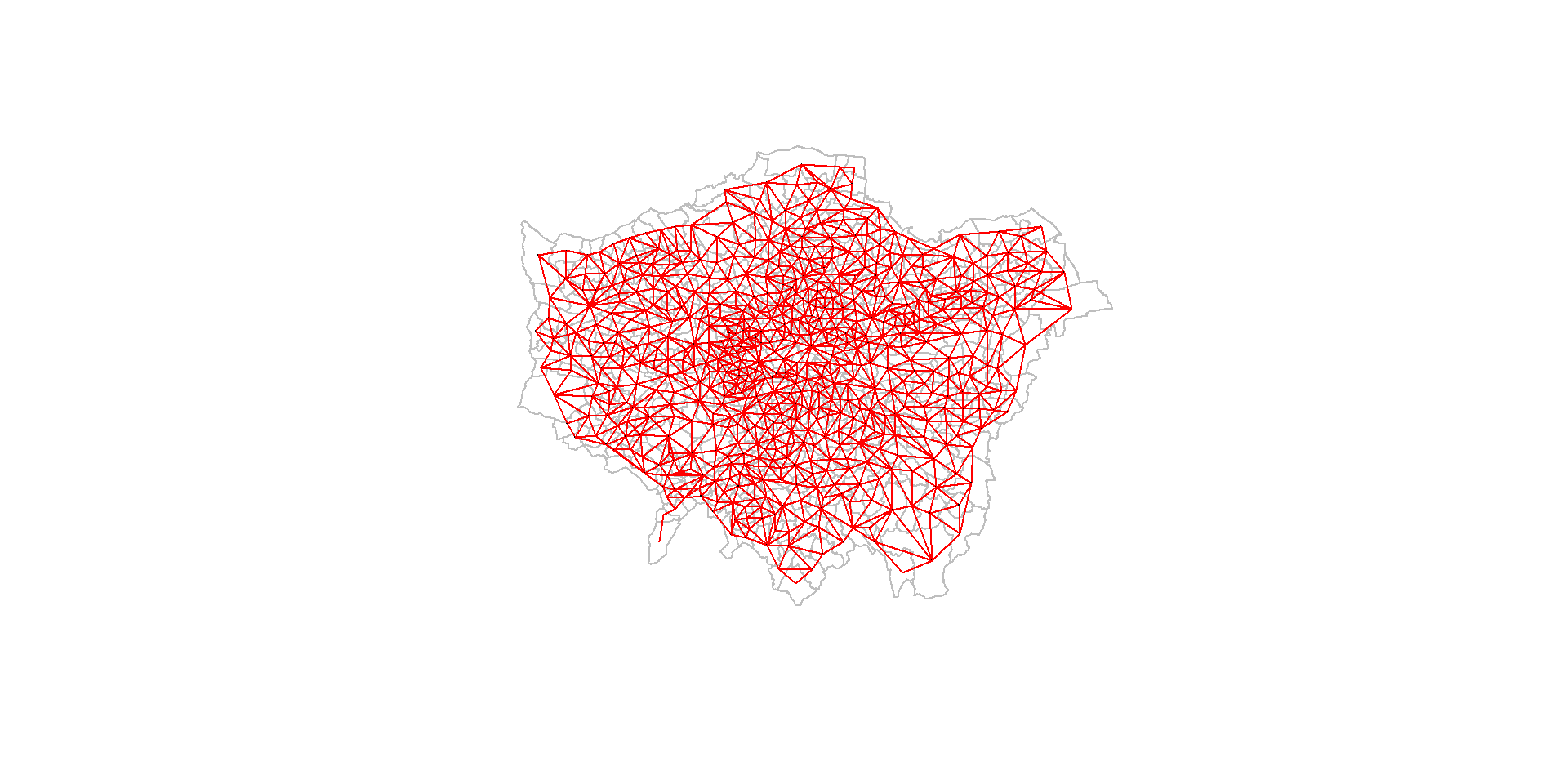

If we measure the distance from the centre (centroid) of one ward to every other ward, we might decide that the 1st, 2nd, 3rd, ..., kth closest wards are near and the others are distant

These neighbour relationships can be stored in an \(n \times n\) ‘spatial weights’ matrix, where the entry in row \(i\), column \(j\) is non-zero only if ward \(j\) is a neighbour of ward \(i\)

The spdep package in R will calculate a range of spatial weights matrices given a set of geometries
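To show what a k-nearest-neighbour weights matrix contains, here is a base R sketch built from centroid coordinates and then row-standardised so that each row sums to 1. In spdep the equivalent steps are `knn2nb(knearneigh(coords, k))` followed by `nb2listw(nb, style = "W")`; the function names below and the centroid values are hypothetical.

```r
# k-nearest-neighbour spatial weights from projected centroid coordinates
knn_weights <- function(coords, k) {
  d <- as.matrix(dist(coords))             # n x n Euclidean distances between centroids
  n <- nrow(d)
  w <- matrix(0, n, n)
  for (i in seq_len(n)) {
    d_i <- d[i, ]
    d_i[i] <- Inf                          # a ward is not its own neighbour
    w[i, order(d_i)[seq_len(k)]] <- 1      # flag the k closest wards as neighbours
  }
  w
}

# Row-standardise so each row sums to 1 (the "W" style used in the outputs in these slides)
row_standardise <- function(w) {
  rs <- rowSums(w)
  rs[rs == 0] <- 1                         # avoid dividing by zero for zones with no neighbours
  w / rs
}

# Hypothetical centroids (e.g. British National Grid metres)
centroids <- rbind(c(0, 0), c(100, 0), c(0, 100), c(500, 500), c(600, 500))
W <- row_standardise(knn_weights(centroids, k = 2))
W
```

Note that k-nearest-neighbour relationships need not be symmetric: ward \(j\) can be among ward \(i\)'s nearest neighbours without \(i\) being among \(j\)'s.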

Where? Relative - Exploring Near and Distant

- We can then choose to include the k nearest neighbours and exclude the rest

Where? Relative - Exploring Near and Distant

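Other conceptions of near might treat any contiguous ward (one sharing a boundary or, under ‘queen’ contiguity, even a single corner point) as near, with distant simply being those which are not contiguous

Near or distant could also be defined by a distance threshold, e.g. all wards whose centroids lie within a chosen distance are near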
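The two alternative definitions of "near" can be sketched in base R as well. For contiguity, a regular grid of square cells stands in for ward polygons (queen = shares an edge or a corner, rook = shares an edge); for the threshold definition, any centroid within `d_max` counts as a neighbour. With real polygons and centroids, spdep's `poly2nb(polys, queen = TRUE)` and `dnearneigh(coords, 0, d_max)` do these jobs. The grid, function names and coordinates here are hypothetical.

```r
# Contiguity weights for a regular grid of square cells (a stand-in for ward polygons)
grid_contiguity <- function(nrows, ncols, queen = TRUE) {
  n <- nrows * ncols
  rc <- expand.grid(row = seq_len(nrows), col = seq_len(ncols))  # cell i -> (row, col)
  w <- matrix(0, n, n)
  for (i in seq_len(n)) {
    dr <- abs(rc$row - rc$row[i])
    dc <- abs(rc$col - rc$col[i])
    # queen: any cell one step away (edge or corner); rook: edge-sharing cells only
    touching <- if (queen) pmax(dr, dc) == 1 else (dr + dc) == 1
    w[i, touching] <- 1
  }
  w
}

# Distance-band weights: neighbours are other zones whose centroids lie within d_max
distance_band <- function(coords, d_max) {
  d <- as.matrix(dist(coords))
  (d > 0 & d <= d_max) * 1                 # 1 = near, 0 = distant (and a zone is not its own neighbour)
}

queen <- grid_contiguity(3, 3, queen = TRUE)
rook  <- grid_contiguity(3, 3, queen = FALSE)
c(queen_centre = sum(queen[5, ]), rook_centre = sum(rook[5, ]))   # 8 and 4 neighbours

pts <- rbind(c(0, 0), c(800, 0), c(2500, 0))  # hypothetical centroids in metres
distance_band(pts, d_max = 1000)
```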

Analysis of ‘where’?

- Where in London do students perform best and worst in their GCSE exams (taken at age 16)?

Is there any pattern? Do better scores and worse scores appear to be clustered? How can we tell?

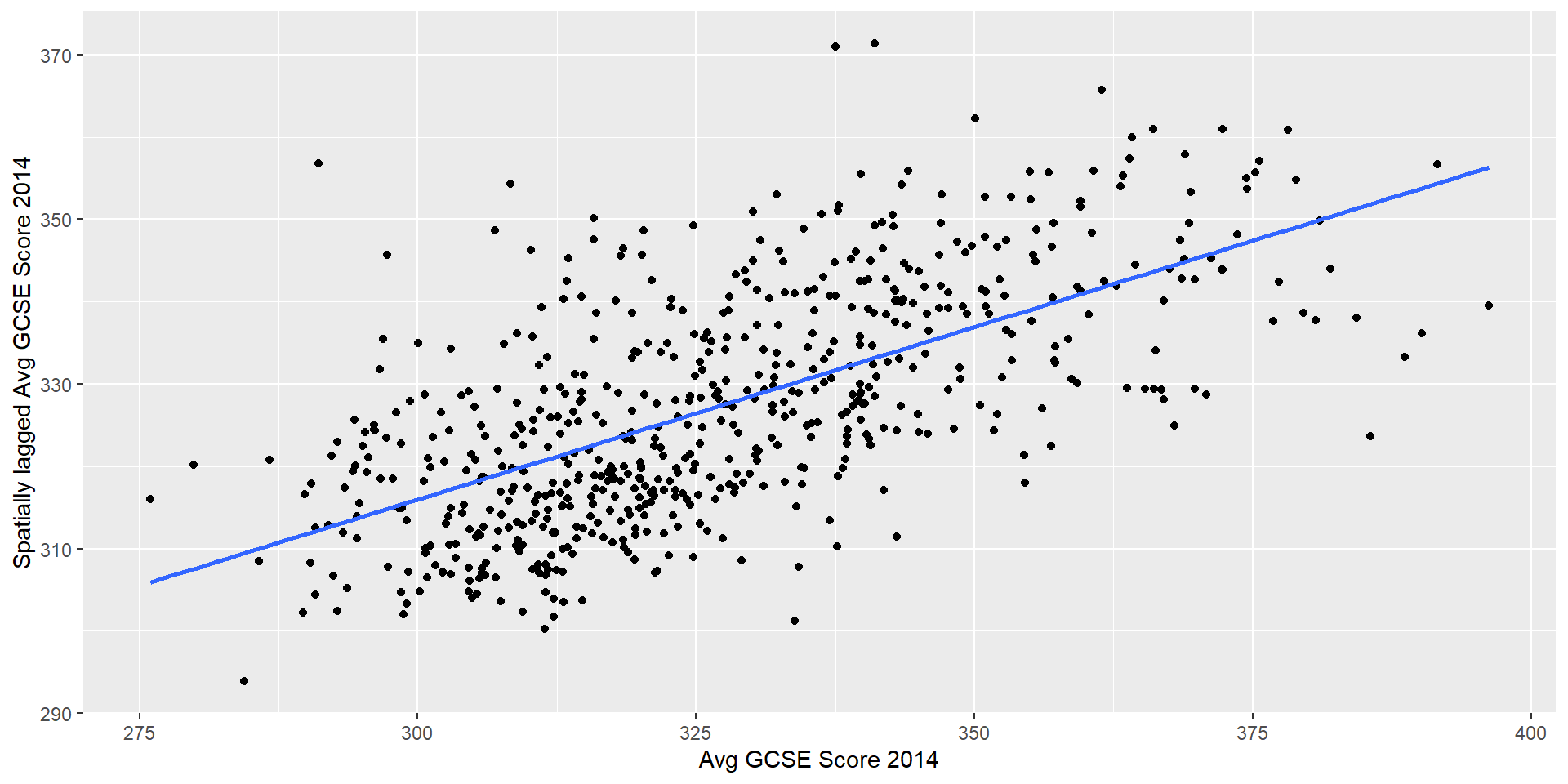

Spatial Autocorrelation

Spatial Autocorrelation - phenomenon of near things being more similar than distant things.

- Do neighbouring wards have more similar GCSE points scores than distant wards?

Can test for spatial autocorrelation by comparing the GCSE Scores in any given ward with the GCSE scores in neighbouring wards (however we choose to define our neighbours - k-nearest, those that are contiguous etc.)

The (weighted) average value of GCSE scores in the neighbouring wards is known as the spatial lag of GCSE scores
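With a row-standardised weights matrix \(W\), the spatial lag is just the matrix product \(Wx\): each ward's lag is the average of its neighbours' values. A tiny base R sketch with four hypothetical wards in a row (spdep's `lag.listw(listw, x)` computes the same thing from a `listw` object):

```r
# Spatial lag: the weighted average of a variable over each zone's neighbours
spatial_lag <- function(W, x) as.vector(W %*% x)

# Four hypothetical wards in a row (1-2-3-4); 1 marks neighbouring wards
A <- rbind(c(0, 1, 0, 0),
           c(1, 0, 1, 0),
           c(0, 1, 0, 1),
           c(0, 0, 1, 0))
W <- A / rowSums(A)                      # row-standardise: each row sums to 1
scores <- c(300, 320, 360, 380)          # hypothetical GCSE scores

spatial_lag(W, scores)                   # 320 330 350 360
```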

Spatial Autocorrelation

(Intercept) 190.2624075

average_gcse_capped_point_scores_2014 0.4190508

- If there is a linear correlation between the variable and its spatial lag, we can observe that values in near places do tend to cluster. The lag is the \(y\) variable here because, by the convention of the Moran scatterplot, the spatial lag is plotted on the \(y\) axis against the variable itself

Moran’s I

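- With row-standardised weights, Moran’s I equals the least-squares regression slope obtained when the spatial lag of a variable is regressed on the variable itself (compare the slope of 0.419 above with the Moran’s I below)

- Values typically range from about +1 (perfect spatial autocorrelation, i.e. clustering) to about -1 (perfect dispersion), with values close to 0 indicating no relationship; the expected value under no spatial autocorrelation is \(-1/(n-1)\), which for 625 wards is -0.0016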
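Moran's I is \(I = \frac{n}{S_0}\frac{\sum_i \sum_j w_{ij} z_i z_j}{\sum_i z_i^2}\), where \(z_i = x_i - \bar{x}\) and \(S_0 = \sum_i \sum_j w_{ij}\); with row-standardised weights \(S_0 = n\). The base R sketch below computes it on a hypothetical 10 x 10 grid of zones with rook contiguity, checks the two extremes (a smooth trend and a checkerboard), and confirms that it matches the regression slope of the lag on the variable. For inference, the outputs in these slides come from `spdep::moran.test()`.

```r
# Moran's I for a variable x and an n x n spatial weights matrix W
moran_i <- function(x, W) {
  z <- x - mean(x)                       # deviations from the mean
  n <- length(x)
  s0 <- sum(W)                           # sum of all weights (= n if row-standardised)
  (n / s0) * sum(z * (W %*% z)) / sum(z^2)
}

# Hypothetical 10 x 10 grid of zones with rook contiguity, row-standardised
nr <- 10; nc <- 10; n <- nr * nc
rc <- expand.grid(row = seq_len(nr), col = seq_len(nc))
A <- outer(seq_len(n), seq_len(n), function(i, j)
  as.numeric(abs(rc$row[i] - rc$row[j]) + abs(rc$col[i] - rc$col[j]) == 1))
W <- A / rowSums(A)

smooth_trend <- rc$row + rc$col          # neighbours have similar values
checkerboard <- (-1)^(rc$row + rc$col)   # rook neighbours always have opposite values

moran_i(smooth_trend, W)                 # strongly positive (clustering)
moran_i(checkerboard, W)                 # -1 (perfect dispersion)

# With row-standardised W, Moran's I equals the slope of the lag regressed on the variable
lag_trend <- as.vector(W %*% smooth_trend)
coef(lm(lag_trend ~ smooth_trend))[["smooth_trend"]]

# Expected value under no spatial autocorrelation, for the 625 London wards
-1 / (625 - 1)                           # -0.0016025641..., matching the moran.test output
```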

Moran I test under randomisation

data: LondonWardsMerged$average_gcse_capped_point_scores_2014

weights: nb2listw(LWard_nb)

Moran I statistic standard deviate = 17.785, p-value < 2.2e-16

alternative hypothesis: greater

sample estimates:

Moran I statistic Expectation Variance

0.4190507533 -0.0016025641 0.0005594495

Moran’s I = 0.42

Moderate, positive spatial autocorrelation in average GCSE scores across London wards - some clustering of both low and high scores

Some spatial autocorrelation might be expected: overlaying the distribution of schools shows that pupils from several neighbouring wards may attend the same school

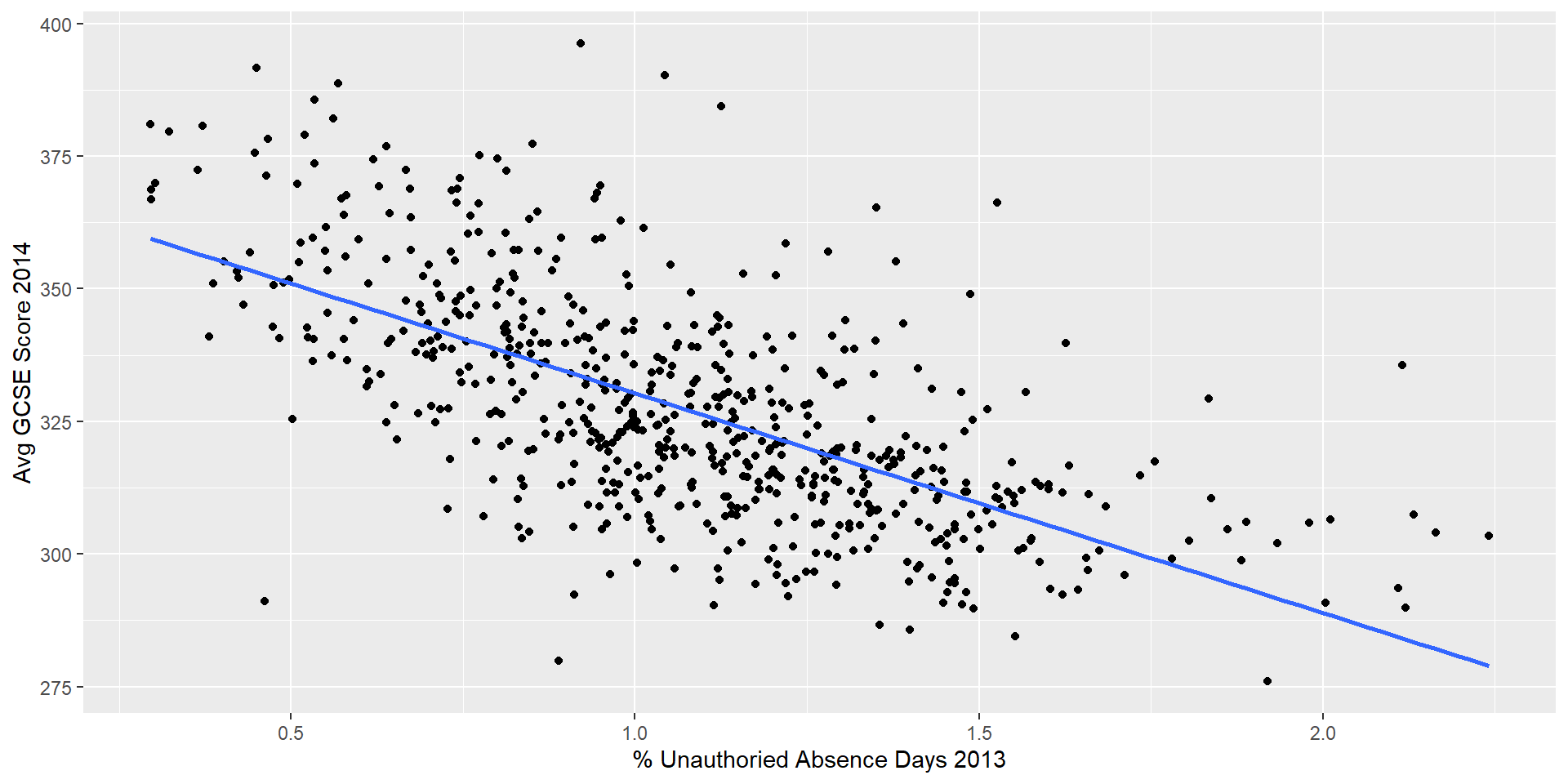

Explaining Spatial Patterns

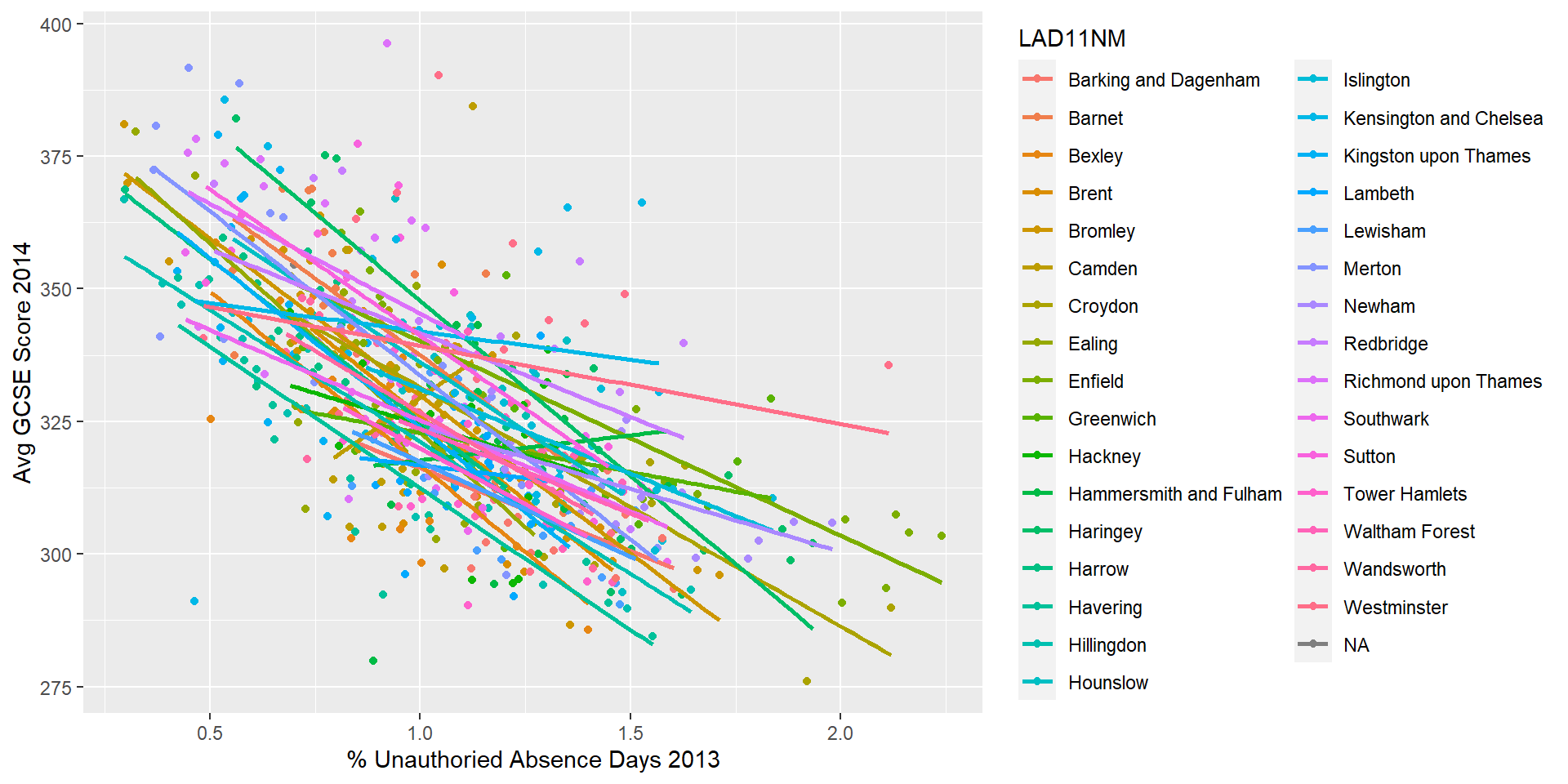

Having observed some spatial patterns in school exam performance in London, we might next want to explain these patterns, perhaps using another variable measured for the same spatial units.

Our own experience might tell us that missing class could negatively impact our ability to learn things in that class

Hypothesis: wards with higher rates of absence from school will tend to experience lower average exam grades


Explaining Spatial Patterns

(Intercept) 371.71500

unauthorised_absence_in_all_schools_percent_2013 -41.40264

Taking the whole of London, it would appear that there is a moderately strong, negative relationship between missing school and exam performance

For every additional 1% of school days missed through unauthorised absence, we might expect a ward's average GCSE score to be about 41 points lower.

But does this relationship hold true across all wards in the city?

Explaining Spatial Patterns

The significant Moran’s I of GCSE scores means that we already know the observations are probably not independent of each other (violating one assumption of regression)

Mapping the residual values from the regression model allows us to observe any spatial clustering in the errors

Clustering of residuals would also indicate a violation of the assumption that errors are independent
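The same Moran's I calculation can be applied to regression residuals. This base R sketch simulates two hypothetical outcomes on a 15 x 15 grid: one driven only by absence plus noise, and one that also depends on a spatially smooth factor left out of the model. Only the second leaves clustered residuals. The data, coefficients and the omitted factor are all made up for illustration; for proper inference on regression residuals, spdep provides `lm.morantest(model, listw)`.

```r
moran_i <- function(x, W) {
  z <- x - mean(x)
  (length(x) / sum(W)) * sum(z * (W %*% z)) / sum(z^2)
}

set.seed(42)
nr <- 15; nc <- 15; n <- nr * nc
rc <- expand.grid(row = seq_len(nr), col = seq_len(nc))
A <- outer(seq_len(n), seq_len(n), function(i, j)
  as.numeric(abs(rc$row[i] - rc$row[j]) + abs(rc$col[i] - rc$col[j]) == 1))
W <- A / rowSums(A)                                  # row-standardised rook weights

absence <- runif(n, 0, 3)                            # hypothetical explanatory variable
omitted <- sin(rc$row / 3) + cos(rc$col / 3)         # hypothetical smooth factor missing from the model
y_clean   <- 370 - 40 * absence + rnorm(n, sd = 10)
y_omitted <- 370 - 40 * absence + 30 * omitted + rnorm(n, sd = 10)

resid_clean   <- resid(lm(y_clean ~ absence))
resid_omitted <- resid(lm(y_omitted ~ absence))

moran_i(resid_clean, W)      # close to 0: no clustering in the errors
moran_i(resid_omitted, W)    # clearly positive: the omitted spatial factor shows up in the residuals
```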

Moran I test under randomisation

data: LondonWardsMerged$model1_resids

weights: nb2listw(LWard_nb)

Moran I statistic standard deviate = 12.183, p-value < 2.2e-16

alternative hypothesis: greater

sample estimates:

Moran I statistic Expectation Variance

0.2862894906 -0.0016025641 0.0005583971

Dealing with Spatial Patterns - Spatial Regression Models (the spatial lag model)

Call:

lagsarlm(formula = average_gcse_capped_point_scores_2014 ~ unauthorised_absence_in_all_schools_percent_2013,

data = LondonWardsMerged, listw = nb2listw(LWard_nb, style = "W"),

method = "eigen")

Residuals:

Min 1Q Median 3Q Max

-68.70402 -9.44615 -0.64207 8.53417 58.56788

Type: lag

Coefficients: (asymptotic standard errors)

Estimate Std. Error z value Pr(>|z|)

(Intercept) 207.4009 15.0053 13.822 < 2.2e-16

unauthorised_absence_in_all_schools_percent_2013 -30.7843 2.0792 -14.806 < 2.2e-16

Rho: 0.46705, LR test value: 104.93, p-value: < 2.22e-16

Asymptotic standard error: 0.041738

z-value: 11.19, p-value: < 2.22e-16

Wald statistic: 125.22, p-value: < 2.22e-16

Log likelihood: -2581.93 for lag model

ML residual variance (sigma squared): 217.21, (sigma: 14.738)

Number of observations: 625

Number of parameters estimated: 4

AIC: 5171.9, (AIC for lm: 5274.8)

LM test for residual autocorrelation

test value: 3.0949, p-value: 0.078537

One way of coping with spatial dependence in the dependent variable is to include the spatial lag of that variable as an explanatory variable

The spatialreg package in R allows us to easily add the spatial lag of the dependent variable (\(Wy\), where \(W\) is the spatial weights matrix) as an explanatory variable in a standard linear regression model; its coefficient is \(\rho\) (Rho)

Running the spatial lag model reveals that the spatial lag is statistically significant (\(\rho\) = 0.467) and reduces the estimated coefficient for missing 1% of school days from about -41 points to about -31 points. The AIC falls from 5274.8 (plain linear model) to 5171.9, and the LM test for residual autocorrelation is no longer significant at the 5% level (p = 0.079)
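To give a feel for what `lagsarlm(..., method = "eigen")` is doing, here is a minimal base R sketch of maximum-likelihood estimation for the spatial lag model \(y = \rho W y + X\beta + \varepsilon\). For a given \(\rho\), \(\beta\) and \(\sigma^2\) have closed forms, so only \(\rho\) is searched numerically, using the eigenvalues of \(W\) for the log-determinant \(\log|I - \rho W|\). The data are simulated on a hypothetical grid with known parameters, and `fit_spatial_lag` is our own name; spatialreg adds standard errors, tests and many refinements this sketch lacks.

```r
# Concentrated log-likelihood fit of y = rho * W y + X beta + e, e ~ N(0, sigma^2 I)
fit_spatial_lag <- function(y, X, W, eig) {
  n <- length(y)
  Wy <- as.vector(W %*% y)
  qx <- qr(X)
  conc_loglik <- function(rho) {
    y_star <- y - rho * Wy                 # y with the spatial lag removed
    e <- qr.resid(qx, y_star)              # OLS residuals of y_star on X
    sigma2 <- sum(e^2) / n
    # log|I - rho W| from the eigenvalues, plus the concentrated Gaussian term
    sum(log(1 - rho * eig)) - (n / 2) * log(sigma2)
  }
  # I - rho W is non-singular for rho between 1/min(eig) and 1/max(eig)
  interval <- c(1 / min(eig), 1 / max(eig)) + c(1e-6, -1e-6)
  rho <- optimize(conc_loglik, interval, maximum = TRUE)$maximum
  list(rho = rho, beta = qr.coef(qx, y - rho * Wy))
}

set.seed(1)
nr <- 20; nc <- 20; n <- nr * nc
rc <- expand.grid(row = seq_len(nr), col = seq_len(nc))
A <- outer(seq_len(n), seq_len(n), function(i, j)
  as.numeric(abs(rc$row[i] - rc$row[j]) + abs(rc$col[i] - rc$col[j]) == 1))
W <- A / rowSums(A)                        # row-standardised rook weights

# Eigenvalues of W via the symmetric matrix D^-1/2 A D^-1/2 (same eigenvalues, all real)
d <- rowSums(A)
eig <- eigen(A / sqrt(outer(d, d)), symmetric = TRUE, only.values = TRUE)$values

# Simulated data with known (hypothetical) parameters: rho = 0.5, beta = (200, -30)
absence <- runif(n, 0, 3)
X <- cbind(intercept = 1, absence = absence)
y <- as.vector(solve(diag(n) - 0.5 * W, X %*% c(200, -30) + rnorm(n, sd = 15)))

fit <- fit_spatial_lag(y, X, W, eig)
fit$rho                                    # should be near the true value of 0.5
fit$beta                                   # should be roughly 200 and -30
```

One caution when reading lag model output: because a change in one ward feeds back through \(Wy\) to its neighbours, the coefficient on absence is not the whole marginal effect. spatialreg provides an `impacts()` method for fitted lag models that reports direct, indirect and total impacts.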

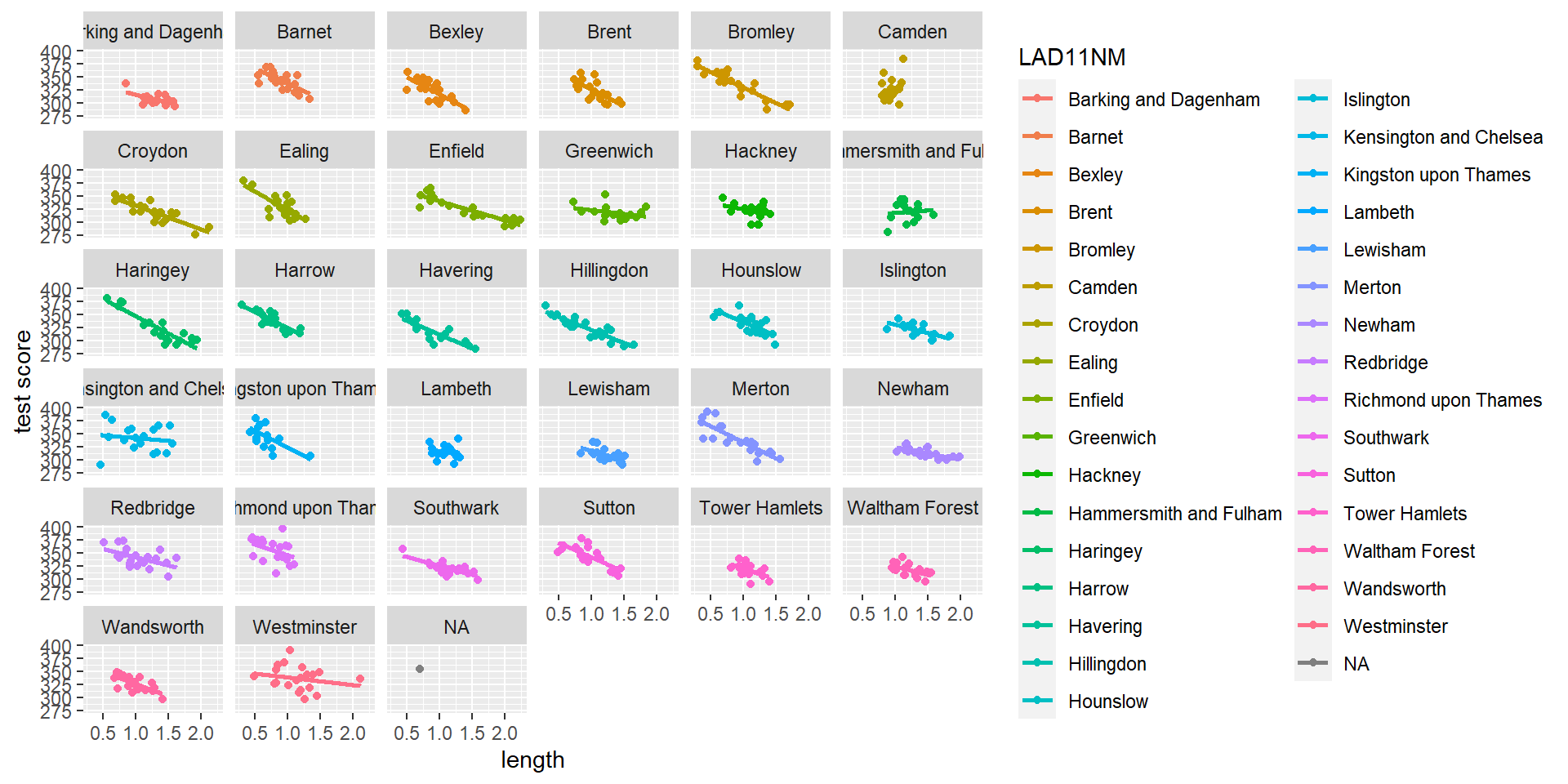

Dealing with Spatial Patterns - Spatial Non-Stationarity

One reason behind a clustering of residuals could be that the relationship between dependent and independent variables might not remain constant across space

In some parts of London, it could be that as unauthorised absence from school rises, exam grades also rise (as unlikely as that might be!).

Or, more plausibly, that in some parts of the city, absence has an even more pronounced negative effect than in others.

It’s also likely that the intercept values (the expected average GCSE score given no unauthorised absence) will be different in different parts of the city - some areas, on average, doing better than others

We can test for the presence of such phenomena by running a series of smaller, more localised regressions and comparing the coefficients that emerge


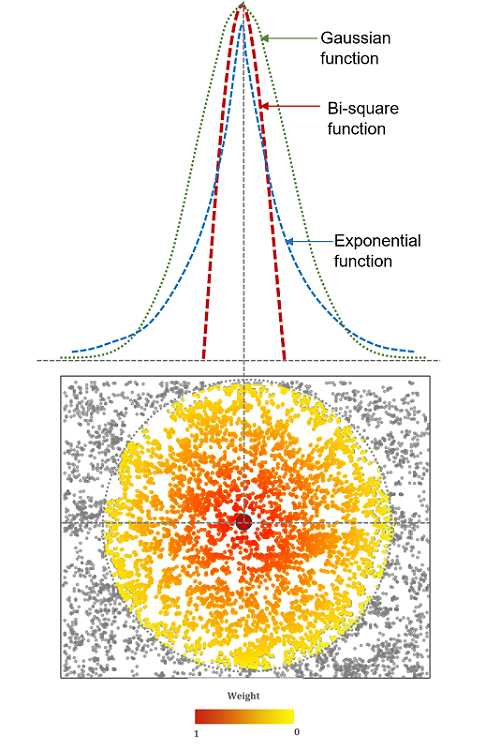

Geographically Weighted Regression

GWR is a method for systematically running a series of localised regression analyses across a study area, collecting the coefficients and other diagnostics for each independent variable in each zone of interest.

Something similar can be achieved through spatial sub-setting - i.e. running analyses for groups of zones within a higher level geography
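A minimal sketch of spatial sub-setting: split the data by a higher-level geography and fit one regression per group. The boroughs, slopes and scores below are simulated and hypothetical; with real data the grouping column would be, for example, the borough each ward falls in.

```r
set.seed(7)
# Hypothetical wards nested in three boroughs with different absence-score relationships
df <- data.frame(
  borough = rep(c("Borough A", "Borough B", "Borough C"), each = 30),
  absence = runif(90, 0, 3),
  stringsAsFactors = FALSE
)
true_slope <- c("Borough A" = -60, "Borough B" = -30, "Borough C" = 5)
df$gcse <- 360 + unname(true_slope[df$borough]) * df$absence + rnorm(90, sd = 5)

# Fit one regression per borough and collect the absence coefficients
slopes <- sapply(split(df, df$borough),
                 function(d) coef(lm(gcse ~ absence, data = d))[["absence"]])
slopes
```

The catch is that the results depend on where the group boundaries happen to fall, which GWR avoids by moving a kernel smoothly across the study area.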

Geographically Weighted Regression

In a GWR analysis, kernel weighting functions of different bandwidths (sizes) and shapes are used to include and weight, or exclude, neighbouring observations

Adaptive weighting can be used to adjust the size of the kernel so that it always contains a set number of nearest observations, which helps where zones are more densely packed in some parts of the study area than in others

For every point in the dataset, a regression is run using the observations within the kernel, each weighted by its distance from that point (which, of course, can only be done meaningfully if we understand the coordinate reference system of the observations)
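The steps above can be sketched in base R: for each location, find an adaptive bandwidth (the distance to its n-th nearest neighbour), weight nearby observations with a bisquare kernel, and run a weighted least-squares fit. The simulated relationship varies from negative in the west to positive in the east, so the local slopes should trace that gradient. Everything here (data, `gwr_sketch`, the choice of 30 neighbours) is hypothetical; dedicated packages such as GWmodel also choose the bandwidth for you, e.g. by cross-validation or AIC, and a fixed Gaussian kernel is a common alternative to the adaptive bisquare used here.

```r
# Local (geographically weighted) regression at every location, using an
# adaptive bisquare kernel that spans roughly the same number of neighbours everywhere
gwr_sketch <- function(coords, y, x, n_neighbours) {
  d <- as.matrix(dist(coords))
  X <- cbind(intercept = 1, slope = x)
  out <- matrix(NA_real_, length(y), 2, dimnames = list(NULL, c("intercept", "slope")))
  for (i in seq_along(y)) {
    bw <- sort(d[i, ])[n_neighbours + 1]                  # adaptive bandwidth (element 1 is the point itself)
    w <- ifelse(d[i, ] < bw, (1 - (d[i, ] / bw)^2)^2, 0)  # bisquare weights, zero outside the kernel
    out[i, ] <- lm.wfit(X, y, w)$coefficients             # weighted least squares for location i
  }
  out
}

set.seed(5)
side <- 15
coords <- as.matrix(expand.grid(east = seq_len(side), north = seq_len(side)))
absence <- runif(nrow(coords), 0, 3)
true_slope <- -2 + 4 * (coords[, "east"] - 1) / (side - 1)   # -2 in the west rising to +2 in the east
y <- 5 + true_slope * absence + rnorm(nrow(coords), sd = 0.3)

local <- gwr_sketch(coords, y, absence, n_neighbours = 30)
cor(local[, "slope"], true_slope)          # local estimates track the varying relationship
```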

Geographically Weighted Regression

Plotting coefficient values for each ward reveals noticeable non-stationarity in the relationship between absence and GCSE scores

In well-off central London boroughs (particularly Hammersmith and Fulham, Kensington and Chelsea and Camden) we see evidence that absence is positively related to GCSE performance

In some of the outer-London boroughs (Barnet, Sutton, Richmond etc.) the effect of missing school is even more severe than it is elsewhere in the city

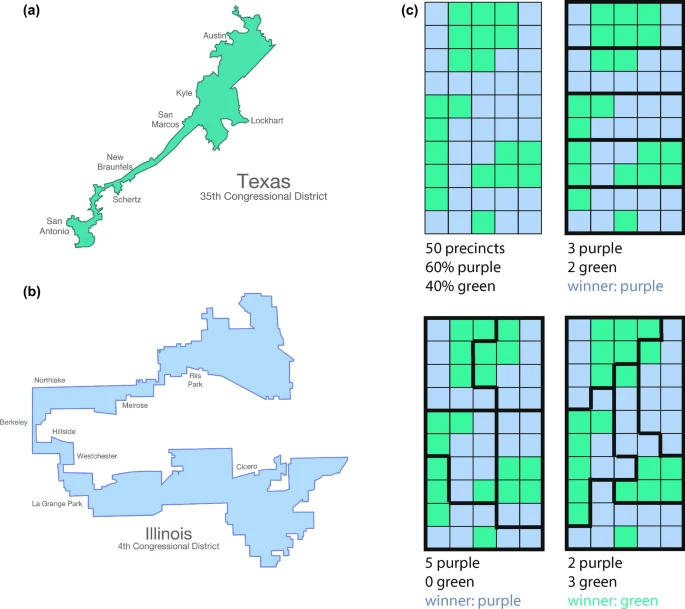

Scale and Shape - Modifiable Areal Units and Ecological Fallacies

Methods which accommodate space explicitly can help us better understand spatial phenomena, but the arrangement of space can alter perceptions and the outcomes of analyses

The Modifiable Areal Unit Problem (MAUP) - popularised in the 1980s by Stan Openshaw - describes issues arising from the shape, scale and aggregation of underlying phenomena into artificial spatial units
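A small base R sketch of the scale effect in the MAUP: two hypothetical variables share a smooth spatial pattern but carry a lot of independent noise. Correlating them across individual grid cells gives a weak relationship; aggregating the same cells into ever larger square zones averages the noise away and the correlation climbs. Only the scale (aggregation) effect is shown here; the zoning effect would appear by shifting or reshaping the zone boundaries at a fixed scale. All values are simulated.

```r
set.seed(3)
side <- 32
cells <- expand.grid(i = seq_len(side), j = seq_len(side))
smooth <- sin(cells$i / 5) + cos(cells$j / 5)        # shared, spatially smooth pattern
cells$x <- smooth + rnorm(nrow(cells), sd = 2)       # hypothetical variable 1
cells$y <- smooth + rnorm(nrow(cells), sd = 2)       # hypothetical variable 2

# Aggregate cells into square zones of block x block cells, then correlate the zone means
cor_at_scale <- function(block) {
  zone <- paste((cells$i - 1) %/% block, (cells$j - 1) %/% block)
  mx <- tapply(cells$x, zone, mean)
  my <- tapply(cells$y, zone, mean)
  cor(mx, my)
}

r <- sapply(c(1, 2, 4, 8), cor_at_scale)
names(r) <- c("1x1 cells", "2x2 zones", "4x4 zones", "8x8 zones")
round(r, 2)                                          # correlation rises as zones get larger
```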

Politicians have known about the issues of scale and aggregation for a long time and have used them to their advantage

The practice of gerrymandering (drawing electoral boundaries for partisan advantage) is a well-known problem in first-past-the-post electoral systems and has been used to shape how votes translate into seats, and so to influence election outcomes

Scale and Shape - Modifiable Areal Units and Ecological Fallacies

Related to the MAUP, the Ecological Fallacy describes a confusion between patterns revealed at one level of aggregation and the assumption that they apply either to individuals or lower levels of aggregation

The basic idea is that patterns of educational attainment revealed at borough level will not necessarily translate down to neighbourhood level (or to individual pupils)

“Simpson’s Paradox” - a type of ecological fallacy where the statistical association or correlation between two variables at one level of aggregation disappears or reverses at another - think back to the Geographically Weighted Regression example from earlier
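Simpson's Paradox is easy to reproduce with simulated data. In this base R sketch, three hypothetical areas each have a positive relationship between `x` and `y`, but the areas differ in their average levels, so the pooled regression across all areas finds a negative slope. The construction (within-area slope of +1, area offsets of +3 in `x` and -5 in `y`) is ours, chosen purely for illustration.

```r
set.seed(11)
g <- rep(0:2, each = 40)                     # three hypothetical areas
u <- runif(120, 0, 2)                        # within-area variation
dat <- data.frame(
  area = factor(g, labels = c("Area 1", "Area 2", "Area 3")),
  x = 3 * g + u,                             # areas differ in average x ...
  y = u - 5 * g + rnorm(120, sd = 0.3)       # ... and in average y; within each area y rises 1-for-1 with x
)

pooled_slope <- coef(lm(y ~ x, data = dat))[["x"]]
within_slopes <- sapply(split(dat, dat$area),
                        function(d) coef(lm(y ~ x, data = d))[["x"]])

pooled_slope       # negative across all areas combined
within_slopes      # positive (close to +1) inside every area
```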

Conclusions

Knowing where something occurs underpins everything spatial data scientists do

Various conventions around how to locate something on the earth’s surface and store information about it have emerged

Near things are more related than distant things and being aware of this when analysing data with a spatial dimension is fundamental to carrying out a robust analysis

Accounting for spatial clustering in data can help analysts:

- more correctly interpret relationships between variables

- avoid making erroneous generalisations that do not apply in local contexts

- be aware of potentially significant consequences in statistical outcomes that are the result of a particular arrangement of space